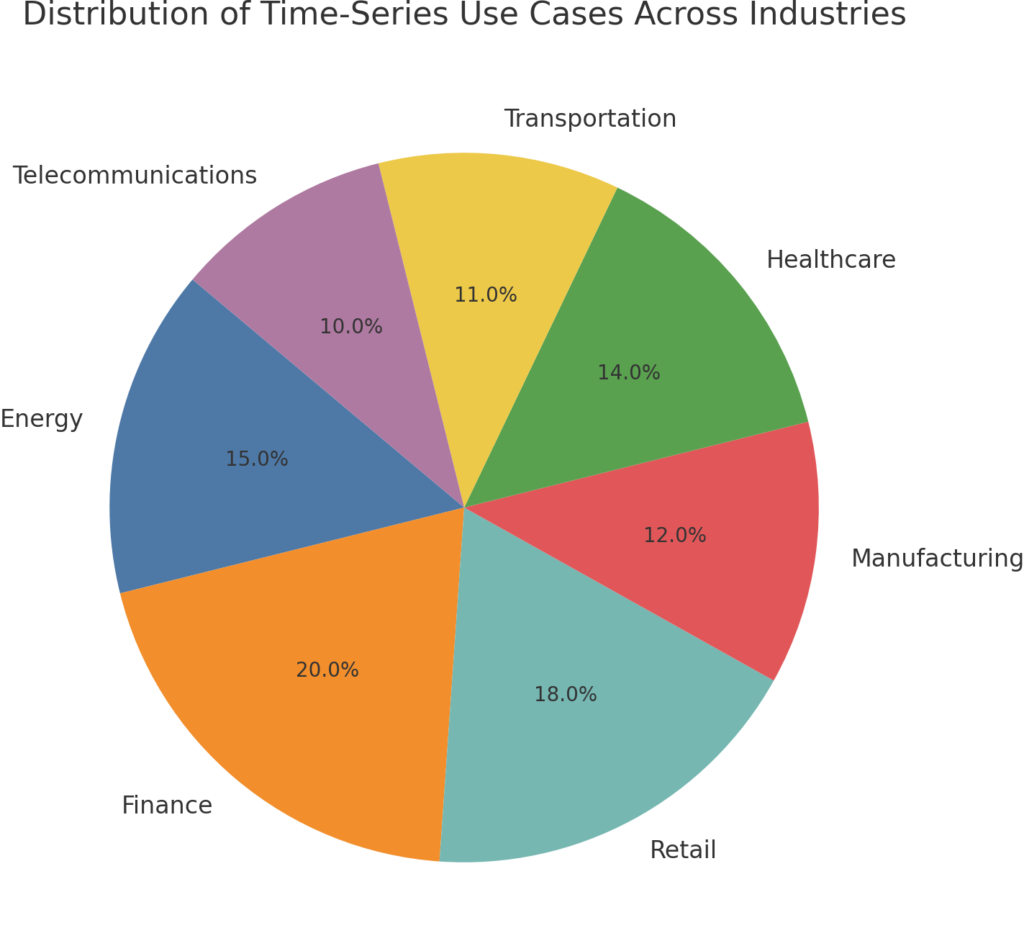

Time-series data is the backbone of predictive analytics, powering applications across industries—from financial forecasting and weather prediction to inventory management and IoT systems. However, extracting actionable insights from time-series data demands more than traditional data engineering approaches. It requires robust pipelines tailored for time-sensitive data processing and optimization. This post explores advanced strategies for optimizing time-series pipelines to enhance predictive analytics, ensuring scalability, accuracy, and efficiency.

Understanding Time-Series Data and Its Challenges

What is Time-Series Data?

Time-series data consists of sequentially ordered data points indexed by time. Unlike other data types, it’s inherently temporal and requires models that account for dependencies between time steps.

Key Challenges in Handling Time-Series Data

- Volume and Velocity: Time-series data streams often come in high volumes and require real-time ingestion and processing.

- Seasonality and Trends: Many time-series datasets exhibit seasonal patterns or long-term trends, complicating preprocessing and model training.

- Missing or Anomalous Data: Gaps and anomalies in time-series data can significantly affect prediction accuracy if not handled properly.

Engineering Pipelines for Time-Series Data

1. Real-Time Data Ingestion

Efficient time-series pipelines start with an ingestion layer that sustains high-throughput data streams at low latency. Systems like Apache Kafka and Amazon Kinesis capture and buffer time-series data from diverse sources, such as IoT devices, financial transactions, and social media streams. Pairing these tools with a time-series database like InfluxDB or TimescaleDB covers storage, indexing, and time-range retrieval, so the same pipeline can serve both batch and real-time workflows.

2. Data Transformation and Feature Engineering

Time-series data requires specialized transformations to extract features that improve model performance. Key techniques include detrending and deseasonalizing, which separate long-term trend and repeating seasonal patterns from the residual signal so they do not skew results. Rolling statistics, such as moving averages and standard deviations, highlight short-term trends and volatility, while lag features capture dependencies across time steps. Advanced feature engineering can also incorporate Fourier transforms for frequency analysis, letting predictive models account for periodic behavior.
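To make these transformations concrete, here is a minimal pandas sketch. The function name `build_features`, the default lags, window, and period, and the sample `demand` series are all illustrative assumptions, not part of any specific pipeline.

```python
import numpy as np
import pandas as pd


def build_features(series: pd.Series, lags=(1, 7), window=7, period=7) -> pd.DataFrame:
    """Lag, rolling, differencing, and Fourier features (illustrative defaults for daily data)."""
    feats = pd.DataFrame({"y": series})
    # Lag features: the value observed `lag` steps earlier.
    for lag in lags:
        feats[f"lag_{lag}"] = series.shift(lag)
    # Rolling statistics over past values only; shift(1) keeps the current value out of its own features.
    past = series.shift(1)
    feats[f"roll_mean_{window}"] = past.rolling(window).mean()
    feats[f"roll_std_{window}"] = past.rolling(window).std()
    # First difference as a simple detrending step.
    feats["diff_1"] = series.diff()
    # One pair of Fourier terms encoding an assumed seasonal period.
    t = np.arange(len(series))
    feats["sin_1"] = np.sin(2 * np.pi * t / period)
    feats["cos_1"] = np.cos(2 * np.pi * t / period)
    # Rows without a full history are dropped.
    return feats.dropna()


# Hypothetical daily series: a linear trend over four weeks.
index = pd.date_range("2024-01-01", periods=28, freq="D")
demand = pd.Series(np.arange(28, dtype=float), index=index)
features = build_features(demand)
```

Computing the rolling statistics on the shifted series is a deliberate choice here: it prevents the value being predicted from leaking into its own features.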

3. Handling Missing Data

Missing values are inevitable in time-series datasets. Robust imputation methods include:

- Linear interpolation for small gaps.

- Advanced techniques like Kalman filters or machine learning-based imputations for larger gaps.
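The first option can be sketched in pandas as follows. The helper name `fill_small_gaps` and the `max_gap` threshold are assumptions for illustration; gaps longer than the threshold are left missing so that a heavier method (such as the Kalman filter mentioned above) can handle them separately.

```python
import numpy as np
import pandas as pd


def fill_small_gaps(series: pd.Series, max_gap: int = 2) -> pd.Series:
    """Linearly interpolate only gaps of at most `max_gap` consecutive missing points."""
    is_na = series.isna()
    # Label each run of consecutive missing / present values, then measure the missing-run lengths.
    run_id = (is_na != is_na.shift()).cumsum()
    run_len = is_na.groupby(run_id).transform("sum")
    # Interpolate interior gaps only; leading and trailing gaps are not extrapolated.
    interpolated = series.interpolate(method="linear", limit_area="inside")
    # Use interpolated values only where the gap is short; longer gaps stay NaN.
    return series.where(~is_na | (run_len > max_gap), interpolated)


readings = pd.Series([1.0, np.nan, 3.0, np.nan, np.nan, np.nan, 7.0])
filled = fill_small_gaps(readings, max_gap=2)
```

In this example the single missing point is filled, while the three-point gap is left untouched.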

4. Real-Time Anomaly Detection

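Detecting anomalies in time-series data is critical for ensuring data quality. Python libraries such as PyOD and PyCaret provide ready-made anomaly detection models, and a forecasting library like Facebook’s Prophet can be used to flag observations that fall outside its forecast uncertainty intervals.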
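As a library-free illustration of the idea, the sketch below flags points that deviate strongly from a rolling baseline. This is a simple rolling z-score, not the method used by any of the tools named above; the function name, window, and threshold are assumptions chosen for the example.

```python
import numpy as np
import pandas as pd


def rolling_zscore_anomalies(series: pd.Series, window: int = 20, threshold: float = 3.0) -> pd.Series:
    """Flag points whose z-score against the preceding `window` values exceeds `threshold`."""
    # The baseline uses past values only, so an outlier cannot mask itself.
    past = series.shift(1)
    mean = past.rolling(window).mean()
    std = past.rolling(window).std()
    z = (series - mean) / std
    # Points without a full window yield NaN and are therefore not flagged.
    return z.abs() > threshold


# Hypothetical signal: a smooth sine wave with one injected spike at position 60.
t = np.arange(100)
signal = pd.Series(np.sin(t / 5.0))
signal.iloc[60] += 10.0
flags = rolling_zscore_anomalies(signal, window=20, threshold=3.0)
```

Because the statistics are computed from a trailing window, the same logic can run incrementally on a stream by keeping only the last `window` values in memory.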

Advanced Optimization Techniques

1. Temporal Aggregation

For large datasets, temporal aggregation is an effective strategy to manage computational complexity while retaining essential patterns. By consolidating data into meaningful intervals, such as hourly, daily, or monthly aggregates, engineers can reduce noise and enhance model interpretability. Temporal aggregation also enables efficient storage and retrieval, especially when combined with partitioned data architectures in distributed systems like Apache Hive or Google BigQuery.
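A minimal sketch of hourly-to-daily aggregation with pandas is shown below. The function name `aggregate_daily`, the chosen summary statistics, and the sample readings are assumptions for illustration.

```python
import numpy as np
import pandas as pd


def aggregate_daily(readings: pd.Series) -> pd.DataFrame:
    """Collapse a timestamp-indexed series into one summary row per calendar day."""
    return readings.resample("D").agg(["mean", "min", "max", "count"])


# Hypothetical hourly readings covering two full days.
timestamps = pd.Timestamp("2024-01-01") + pd.to_timedelta(np.arange(48), unit="h")
hourly = pd.Series(np.arange(48, dtype=float), index=timestamps)
daily = aggregate_daily(hourly)
```

Keeping `min`, `max`, and `count` next to the mean preserves some of the detail that averaging alone would discard, and the count makes days with missing readings easy to spot.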

2. Model Selection for Time-Series Forecasting

Choosing the right model is crucial. Popular options include:

- Autoregressive Integrated Moving Average (ARIMA): Effective for univariate time-series.

- Long Short-Term Memory Networks (LSTM): Excellent for capturing long-term dependencies.

- Prophet: User-friendly and great for seasonality-rich data.

3. Hyperparameter Tuning

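Automated hyperparameter tuning with tools like Optuna or scikit-learn's GridSearchCV can improve model performance, provided the validation splits respect time order (for example, with scikit-learn's TimeSeriesSplit). Key parameters include: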

- Learning rates for neural networks.

- Orders (p, d, q) for ARIMA, plus the seasonal period for its seasonal variant (SARIMA).

- Window sizes for moving averages.
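The last item can be illustrated with a hand-rolled grid search, shown here as a sketch rather than a substitute for Optuna or GridSearchCV. Each candidate window is scored by RMSE on a holdout at the end of the series, and every forecast uses only earlier observations. All function names and the sample series are assumptions for the example.

```python
import numpy as np


def rmse(actual, predicted) -> float:
    """Root mean squared error between two equal-length sequences."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))


def moving_average_forecasts(values: np.ndarray, window: int, start: int) -> np.ndarray:
    """One-step-ahead forecasts for values[start:], each from the preceding `window` points."""
    return np.array([values[t - window:t].mean() for t in range(start, len(values))])


def tune_window(values, candidate_windows, holdout: int):
    """Return the window with the lowest holdout RMSE, plus all scores."""
    values = np.asarray(values, dtype=float)
    start = len(values) - holdout  # the holdout is the final `holdout` points, in time order
    scores = {}
    for window in candidate_windows:
        if window > start:
            continue  # not enough history before the holdout for this window
        scores[window] = rmse(values[start:], moving_average_forecasts(values, window, start))
    best = min(scores, key=scores.get)
    return best, scores


# Hypothetical series with a steady upward trend.
history = np.arange(30, dtype=float)
best_window, window_scores = tune_window(history, candidate_windows=[2, 5, 10], holdout=10)
```

On a trending series like this one, shorter windows lag the trend less, so the smallest candidate scores best. A seasonal series could favor a window that matches its period instead.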

| Sales Category | ARIMA RMSE | LSTM RMSE | Prophet RMSE |
|---|---|---|---|
| Pharmacies | 0.15 | 0.20 | 0.25 |
| Railway Tickets | 0.10 | 0.12 | 0.18 |
| Books | 0.08 | 0.09 | 0.14 |
| Sporting Goods | 0.12 | 0.15 | 0.20 |
| Fuel Stations | 0.11 | 0.10 | 0.17 |
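A comparison like the one in the table can be turned into a per-category model choice with a few lines of Python. The sketch below copies the RMSE values from the table; the variable names are illustrative.

```python
# RMSE values copied from the table above (lower is better).
rmse_by_category = {
    "Pharmacies": {"ARIMA": 0.15, "LSTM": 0.20, "Prophet": 0.25},
    "Railway Tickets": {"ARIMA": 0.10, "LSTM": 0.12, "Prophet": 0.18},
    "Books": {"ARIMA": 0.08, "LSTM": 0.09, "Prophet": 0.14},
    "Sporting Goods": {"ARIMA": 0.12, "LSTM": 0.15, "Prophet": 0.20},
    "Fuel Stations": {"ARIMA": 0.11, "LSTM": 0.10, "Prophet": 0.17},
}

# Pick the model with the lowest RMSE for each category.
best_model = {
    category: min(scores, key=scores.get)
    for category, scores in rmse_by_category.items()
}

for category, model in best_model.items():
    print(f"{category}: {model} (RMSE {rmse_by_category[category][model]:.2f})")
```

With these figures, ARIMA has the lowest error in every category except Fuel Stations, where LSTM is slightly ahead.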

Benefits of Optimized Time-Series Pipelines

Enhanced Forecast Accuracy

Optimized pipelines deliver clean, consistent data to models tuned for the task, which lowers forecast error.

Scalable Solutions

By building on distributed frameworks like Apache Spark Structured Streaming or Apache Flink, time-series pipelines can scale horizontally to handle massive datasets while keeping processing latency stable.

Real-Time Insights

Real-time optimization ensures actionable insights are available when they matter most, enabling faster decision-making.

Future Trends in Time-Series Analytics

Integration with Edge Computing

Edge computing will enable real-time analytics closer to the data source, significantly reducing latency and bandwidth usage. By processing data at the edge—whether on IoT devices, local gateways, or distributed servers—organizations can minimize the time it takes to derive actionable insights. This approach is particularly beneficial for applications like predictive maintenance and autonomous vehicles, where milliseconds can make a critical difference.

Explainable AI (XAI) for Time-Series Models

Explainable AI (XAI) frameworks are set to revolutionize time-series analytics by providing transparent insights into model decision-making processes. Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations) allow engineers and stakeholders to understand why a particular prediction was made. This enhances trust and usability, especially in high-stakes applications like financial forecasting and healthcare.

Quantum Time-Series Analytics

Emerging quantum algorithms may eventually speed up some of the computations behind time-series forecasting, though this remains an early-stage research area and practical advantages have yet to be demonstrated.

Conclusion

Time-series optimization is not just about processing data; it’s about transforming raw streams into actionable insights. By employing advanced engineering practices and leveraging cutting-edge tools, organizations can unlock the full potential of predictive analytics. Whether it’s forecasting sales, detecting anomalies, or managing inventory, time-series optimization ensures that data-driven decisions are faster, smarter, and more accurate.

Key Takeaway: Optimizing time-series pipelines is essential for organizations aiming to harness the power of predictive analytics in an increasingly time-sensitive world.